To compare the test case results with the expected results, you need to run the test scripts for a rulebase. A report will then be generated that shows the results.

To run a single test script:

To run multiple scripts for a rulebase:

NOTE: You should re-run your test script(s) whenever the rulebase changes to confirm that the results are still correct.

After a test script has run, a tab opens in the top right-hand pane in Oracle Policy Modeling that shows the Test Report.

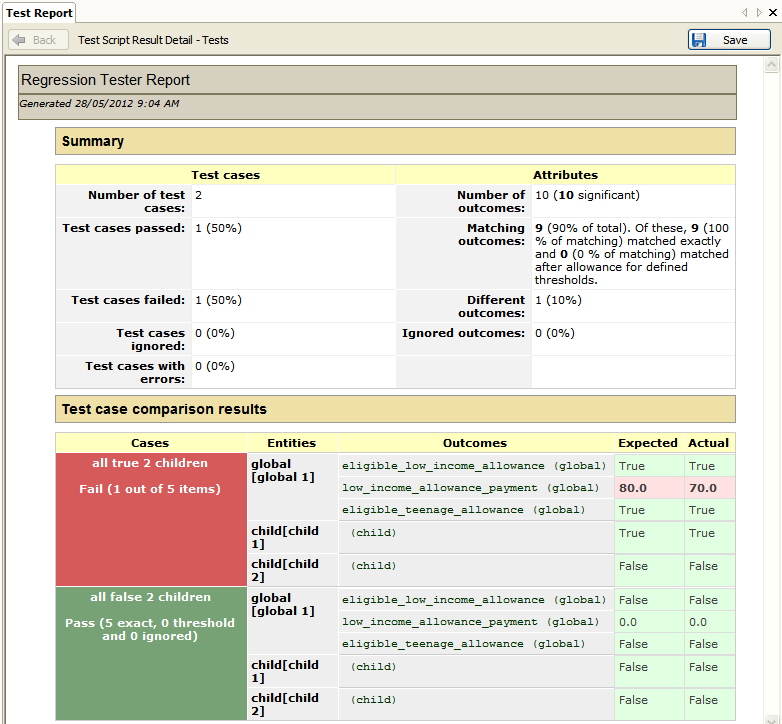

An example of a Test Report for an individual test script is shown below. The report contains two sections: a summary of the report and the test case comparison results. An additional Errors section appears if any errors are encountered while the script runs.

Test cases that pass are highlighted in green and test cases that fail are highlighted in red.

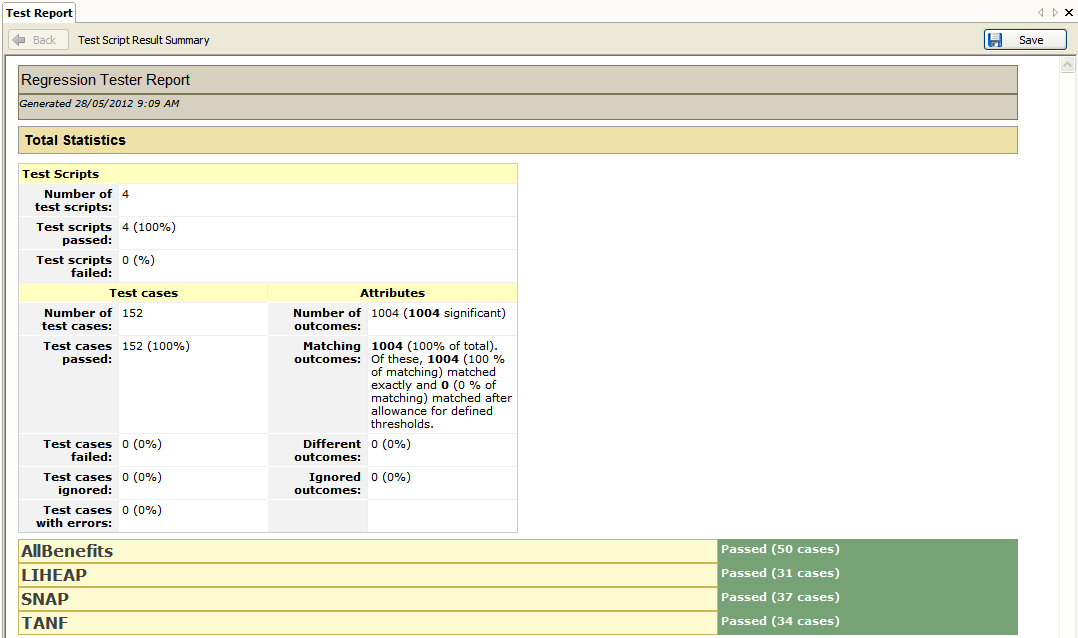

If you have selected multiple test scripts to run, the Test Report opens to a Test Script Result Summary. This shows the Total Statistics for all the test scripts at the top of the report, and you can view individual reports by clicking the links below it.

To navigate from an individual report back to the summary view, click the Back button at the top left of the Test Report tab.

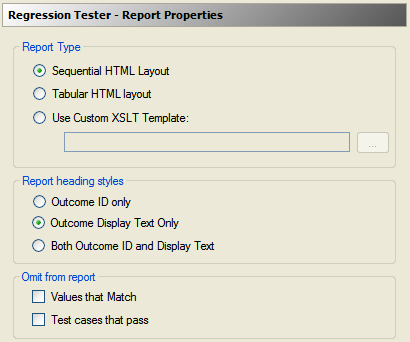

Reports can be customized by changing the report options in File | Project Properties | Regression Tester Properties | Report Options.

The options in this dialog box are explained below:

| Setting | Options |
|---|---|
| Report type | The Test Report can be rendered in two distinct layouts: sequential or tabular. Alternatively, you can specify a custom XSLT template for the regression tester to use when generating the Test Report. |
| Report heading styles | There are three options for report headings: |
| Omit from report | You have the option to omit from the Test Report: |
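If you write a custom XSLT template for the Report type option, it can help to try the stylesheet outside Oracle Policy Modeling first. The following is a minimal sketch using the standard Java `javax.xml.transform` API. It assumes an XSLT 1.0 processor. The report XML below is entirely hypothetical: the element and attribute names (`report`, `testCase`, `script`, `name`, `result`) are placeholders, not the regression tester's actual schema, which is not described here. The template colours passing test cases green and failing ones red, mirroring the default report.

```java
import java.io.StringReader;
import java.io.StringWriter;
import javax.xml.transform.Transformer;
import javax.xml.transform.TransformerException;
import javax.xml.transform.TransformerFactory;
import javax.xml.transform.stream.StreamResult;
import javax.xml.transform.stream.StreamSource;

public class ReportTransform {

    // HYPOTHETICAL report XML: placeholder element/attribute names,
    // not the regression tester's documented format.
    static final String SAMPLE_XML =
        "<report script=\"eligibility\">"
        + "<testCase name=\"TC1\" result=\"pass\"/>"
        + "<testCase name=\"TC2\" result=\"fail\"/>"
        + "</report>";

    // Minimal XSLT 1.0 template: one table row per test case,
    // green background for "pass", red for anything else.
    static final String SAMPLE_XSLT =
        "<xsl:stylesheet version=\"1.0\" xmlns:xsl=\"http://www.w3.org/1999/XSL/Transform\">"
        + "<xsl:output method=\"html\"/>"
        + "<xsl:template match=\"/report\">"
        + "<html><body><h1><xsl:value-of select=\"@script\"/></h1><table>"
        + "<xsl:for-each select=\"testCase\">"
        + "<tr><xsl:attribute name=\"style\">background:"
        + "<xsl:choose><xsl:when test=\"@result='pass'\">green</xsl:when>"
        + "<xsl:otherwise>red</xsl:otherwise></xsl:choose></xsl:attribute>"
        + "<td><xsl:value-of select=\"@name\"/></td>"
        + "<td><xsl:value-of select=\"@result\"/></td></tr>"
        + "</xsl:for-each></table></body></html>"
        + "</xsl:template></xsl:stylesheet>";

    // Applies an XSLT stylesheet to an XML document and returns the output as a string.
    static String render(String xml, String xslt) throws TransformerException {
        Transformer transformer = TransformerFactory.newInstance()
            .newTransformer(new StreamSource(new StringReader(xslt)));
        StringWriter out = new StringWriter();
        transformer.transform(new StreamSource(new StringReader(xml)), new StreamResult(out));
        return out.toString();
    }

    public static void main(String[] args) throws TransformerException {
        System.out.println(render(SAMPLE_XML, SAMPLE_XSLT));
    }
}
```

To adapt this, replace `SAMPLE_XML` with report XML that matches what the regression tester actually produces, and adjust the XPath expressions in the template to match.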

You can save a test report by clicking the Save button at the top right of the Test Report tab.

If the Test Report is for an individual test script, the report will be saved as an HTML file.

If the Test Report contains multiple test script reports, you have the option to save the report in XML or HTML format. You need to specify a folder in which the summary and individual report files will be saved.